I’ve had the opportunity to work with a few different infrastructure automation tools such as Puppet, Chef, Heat and CloudFormation but Ansible just has a simplicity to it that I like, although I admit I do have a strong preference for Puppet because i’ve used it extensively and have had good success with it.

In one of my previous project I was creating a repeatable solution to create a Docker Swarm cluster (before SwarmKit) with Consul and Flocker. I wanted this to be completely scripted to I climbed on the shoulders of AWS, Ansible and Docker Machine.

The script would do 4 things.

- Initialize a security group in an existing VPC and create rules for the given setup.

- Create machines using Docker-Machine of Consul and Swarm

- Use AWS CLI to output the machines and pipe them to a python script that processes the JSON output and creates an Ansible inventory.

- Use the inventory to call Ansible to run something.

This flow can actually be used fairly reliable not only for what I used it for but to automate a lot of things, even expand an existing deployment.

An example of this workflow can be found here.

I’m going to focus on steps #3 and #4 here. First, we use the AWS CLI to output machine information and pass it to a script.

# List only running my-prefix* nodes $ aws ec2 describe-instances \ --filter Name=tag:Name,Values=my-prefix* \ Name=instance-state-code,Values=16 --output=json | \ python create_flocker_inventory.py

We use the instance-state-code of 16 as it corresponds with Running instances. You can find more codes here: http://docs.aws.amazon.com/AWSEC2/latest/APIReference/API_InstanceState.html. Then we choose JSON output using –output=json.

Next, the important piece is the pipe ( `|` ). This signifies we pass the output from the command on the left of the | to the command on the right which is create_flocker_inventory.py so that the output is used as input to the script.

So what does the python script do with the output? Below is the script that I used to process the JSON output. It first setups up an _AGENT_YML variable that contains YAML for a configuration then the main() function takes the JSON from json.loads() in the script initialization and creates an array of dictionaries that represent instances and opens a file and writes each instance to the Ansible inventory file called “ansible_inventory”. After that the “agent.yml” is written to a file along with some secrets from the environment.

import os

import json

import sys

_AGENT_YML = """

version: 1

control-service:

hostname: %s

port: 4524

dataset:

backend: aws

access_key_id: %s

secret_access_key: %s

region: %s

zone: %s

"""

def main(input_data):

instances = [

{

u'ip': i[u'Instances'][0][u'PublicIpAddress'],

u'name': i[u'Instances'][0][u'KeyName']

}

for i in input_data[u'Reservations']

]

with open('./ansible_inventory', 'w') as inventory_output:

inventory_output.write('[flocker_control_service]\n')

inventory_output.write(instances[0][u'ip'] + '\n')

inventory_output.write('\n')

inventory_output.write('[flocker_agents]\n')

for instance in instances:

inventory_output.write(instance[u'ip'] + '\n')

inventory_output.write('\n')

inventory_output.write('[flocker_docker_plugin]\n')

for instance in instances:

inventory_output.write(instance[u'ip'] + '\n')

inventory_output.write('\n')

inventory_output.write('[nodes:children]\n')

inventory_output.write('flocker_control_service\n')

inventory_output.write('flocker_agents\n')

inventory_output.write('flocker_docker_plugin\n')

with open('./agent.yml', 'w') as agent_yml:

agent_yml.write(_AGENT_YML % (instances[0][u'ip'], os.environ['AWS_ACCESS_KEY_ID'], os.environ['AWS_SECRET_ACCESS_KEY'], os.environ['MY_AWS_DEFAULT_REGION'], os.environ['MY_AWS_DEFAULT_REGION'] + os.environ['MY_AWS_ZONE']))

if __name__ == '__main__':

if sys.stdin.isatty():

raise SystemExit("Must pipe input into this script.")

stdin_json = json.load(sys.stdin)

main(stdin_json)

After this processes the JSON from the AWS CLI, all that remains is to run Ansible with our newly created Ansible inventory. In this case, we pass the inventory and configuration along with the ansible playbook we want for our installation.

$ ANSIBLE_HOST_KEY_CHECKING=false ansible-playbook \

--key-file ${AWS_SSH_KEYPATH} \

-i ./ansible_inventory \

./aws-flocker-installer.yml \

--extra-vars "flocker_agent_yml_path=${PWD}/agent.yml"

Conclusion

Overall this flow can be used along with other Cloud CLI tools such as Azure, GCE etc that can output instance state that you can pipe to a script for more processing. It may not be the most effective way but if you want to get a semi complex environment up and running in a repeatable fashion for development needs it has worked pretty well to follow the “pre-setup_get-output_prcocess-output_install_config” flow outlined above.

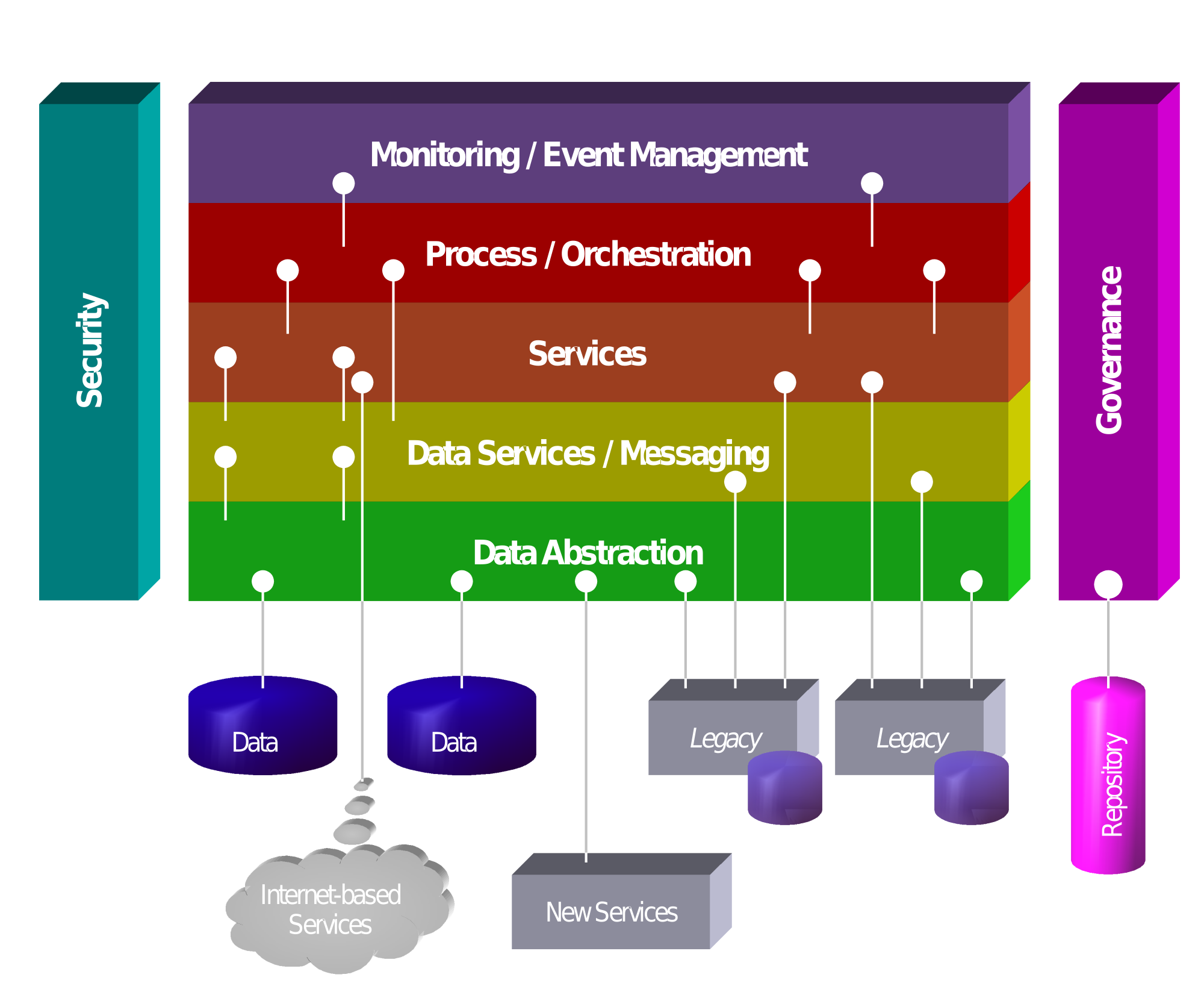

ils / Ruby code to implement smaller services that make up the site, and in some cases this is where we can go wrong, if it works, don’t break it. Other times, go ahead, break out smaller services and deploy them, but start small, think of it like the process of an amoeba splitting 🙂

ils / Ruby code to implement smaller services that make up the site, and in some cases this is where we can go wrong, if it works, don’t break it. Other times, go ahead, break out smaller services and deploy them, but start small, think of it like the process of an amoeba splitting 🙂